REDMOND - In a fundamental shift for enterprise storage architecture, Microsoft has officially rolled out native NVMe support for Windows Server 2025, beginning the retirement of a legacy translation layer that has governed Windows storage operations for over a decade. The update, released as part of the October cumulative update, promises double-digit percentage gains in storage performance and CPU efficiency for data centers worldwide.

The move addresses a long-standing bottleneck in how Windows communicates with modern high-speed storage. Microsoft reports that by bypassing the traditional SCSI (Small Computer System Interface) emulation, the new native path can deliver up to 80 percent more Input/Output Operations Per Second (IOPS) and cut CPU overhead by nearly half. Although the feature is currently opt-in, industry experts view it as a critical evolution for hyperscale computing and virtualization environments, where every processor cycle counts.

The End of the SCSI Translation Era

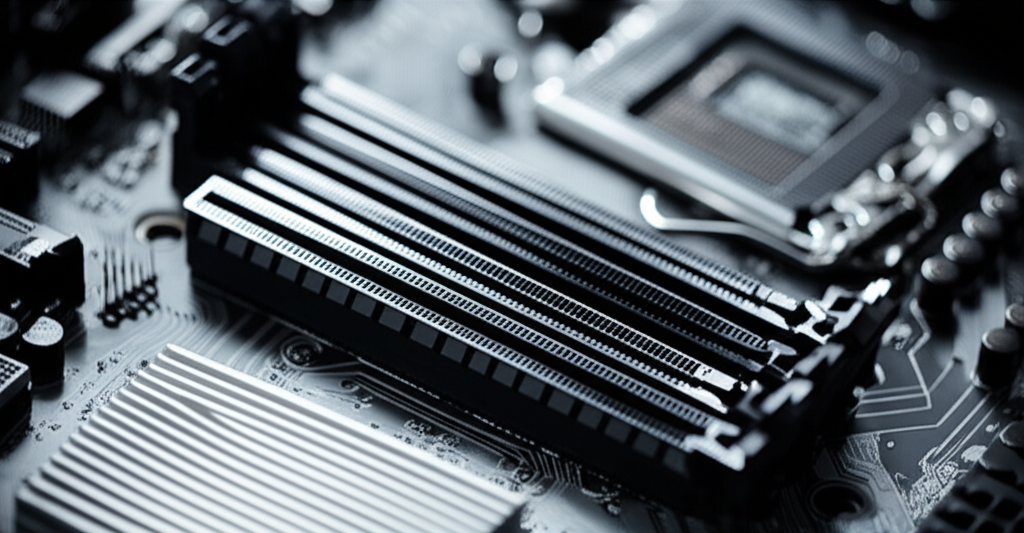

To understand the significance of this update, consider the architectural debt that has accumulated since NVMe (Non-Volatile Memory Express) was introduced roughly 14 years ago. Until now, Windows Server treated modern NVMe solid-state drives much like older spinning hard disks: the storage stack issued SCSI commands, and a compatibility layer translated them into NVMe commands for the drive. SCSI is a protocol originally designed for mechanical drives connected via cables.

According to Tom's Hardware, this translation introduced latency and processing overhead: every I/O request had to be converted from one command set to the other, consuming CPU cycles on each operation. The new native NVMe stack removes this middleman entirely.

"Native NVMe removes translation layers that previously routed NVMe I/O through SCSI, reducing processing overhead and latency." - TechRadar
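To make "translation" concrete, the sketch below shows the kind of field-by-field mapping a layer between the two command sets performs for a single read, going from a SCSI READ(10) request to an NVMe Read command. It follows the public READ(10) CDB and NVMe Read command layouts, but it is an illustration of the concept, not Microsoft's StorNVMe code; the names `translate_read10` and `NvmeRead` and the default namespace ID of 1 are hypothetical. A real translation layer also has to handle data-buffer descriptors, many other commands and status mapping.

```python
import struct
from dataclasses import dataclass

SCSI_OP_READ_10 = 0x28  # SCSI READ(10) operation code
NVME_OP_READ = 0x02     # NVMe NVM command set Read opcode


@dataclass
class NvmeRead:
    """Hypothetical subset of an NVMe Read submission-queue entry."""
    opcode: int
    nsid: int   # namespace ID (command dword 1)
    cdw10: int  # starting LBA, bits 31:0
    cdw11: int  # starting LBA, bits 63:32
    cdw12: int  # bits 15:0 = block count minus one; bit 30 = FUA


def translate_read10(cdb: bytes, nsid: int = 1) -> NvmeRead:
    """Map a 10-byte SCSI READ(10) CDB onto NVMe Read command fields.

    nsid defaults to 1 purely as an illustrative assumption.
    """
    if len(cdb) != 10 or cdb[0] != SCSI_OP_READ_10:
        raise ValueError("not a READ(10) CDB")
    fua = bool(cdb[1] & 0x08)                  # byte 1, bit 3: force unit access
    (lba,) = struct.unpack(">I", cdb[2:6])     # bytes 2-5: big-endian LBA
    (blocks,) = struct.unpack(">H", cdb[7:9])  # bytes 7-8: big-endian block count
    if blocks == 0:
        # SCSI allows a zero-length READ(10); NVMe's 0's-based count cannot express it,
        # so this sketch simply refuses to build a command.
        raise ValueError("zero-length transfer; no NVMe command needed")
    cdw12 = (blocks - 1) & 0xFFFF              # NVMe counts blocks 0's-based
    if fua:
        cdw12 |= 1 << 30
    return NvmeRead(NVME_OP_READ, nsid, lba & 0xFFFFFFFF, lba >> 32, cdw12)
```

For example, `translate_read10(bytes([0x28, 0, 0, 0, 0x10, 0, 0, 0, 8, 0]))` requests eight blocks starting at LBA 4096 and yields an NVMe Read with `cdw10 == 4096` and `cdw12 == 7`. On the native path this conversion step disappears, because the disk driver builds the NVMe command directly.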

According to the Microsoft Community Hub, the new architecture provides "streamlined, lock-free I/O paths" that cut round-trip times for storage operations. By exposing NVMe semantics directly to the kernel, the operating system can make fuller use of the parallelism built into modern SSDs.

Performance Metrics and Benchmarks

The performance implications of this update are substantial. Figures published by 4sysops, in line with Microsoft's own internal testing, indicate that the native stack uses approximately 45 percent fewer CPU cycles per I/O than Windows Server 2022. Those cycles are freed for application workloads instead of being spent managing storage traffic.
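The 45 percent figure can be related to throughput with a simple, idealized calculation. Assume (this is a simplification for illustration, not Microsoft's stated methodology) that one core is fully occupied by storage-stack work, runs at f cycles per second, and spends c cycles per I/O; the symbols f, c, c_legacy and c_native are introduced here only for this sketch.

```latex
\begin{aligned}
\text{IOPS}_{\max} &= \frac{f}{c} \\
c_{\text{native}} &= (1 - 0.45)\, c_{\text{legacy}} = 0.55\, c_{\text{legacy}} \\
\frac{\text{IOPS}_{\max,\text{native}}}{\text{IOPS}_{\max,\text{legacy}}}
  &= \frac{f / (0.55\, c_{\text{legacy}})}{f / c_{\text{legacy}}}
   = \frac{1}{0.55} \approx 1.82
\end{aligned}
```

Under that assumption, 45 percent fewer cycles per I/O would let one core drive about 82 percent more I/O, in the same range as the reported 80 percent IOPS gain. Real deployments are also bounded by the drive, the PCIe link and the application, so this is a CPU-side ceiling rather than a prediction.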

Furthermore, random read performance has seen a dramatic uplift. Reports indicate that 4K random read IOPS can increase by up to 80 percent. For database servers, high-frequency trading platforms, and AI inference engines that rely on rapid data retrieval, this represents a near-generational leap in throughput without the need for hardware upgrades.
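Because IOPS counts operations rather than bytes, it helps to convert the figure into throughput. The sketch below multiplies IOPS by the transfer size, assuming "4K" means 4 KiB; the 1,000,000-IOPS baseline is a hypothetical number chosen for round arithmetic, not a measured result, and the helper names are illustrative.

```python
BLOCK_SIZE_BYTES = 4 * 1024  # assumption: a "4K" random read transfers 4 KiB


def iops_to_bytes_per_sec(iops: float, block_size: int = BLOCK_SIZE_BYTES) -> float:
    """Throughput implied by an IOPS figure at a fixed transfer size."""
    return iops * block_size


def apply_uplift(iops: float, uplift: float) -> float:
    """Scale an IOPS figure by a fractional gain (0.80 means +80 percent)."""
    return iops * (1.0 + uplift)


if __name__ == "__main__":
    baseline_iops = 1_000_000                        # hypothetical baseline
    native_iops = apply_uplift(baseline_iops, 0.80)  # best-case "up to 80 percent"
    for label, iops in (("legacy path", baseline_iops), ("native NVMe", native_iops)):
        gb_per_s = iops_to_bytes_per_sec(iops) / 1e9  # decimal GB (10^9 bytes)
        print(f"{label}: {iops:,.0f} IOPS ~ {gb_per_s:.2f} GB/s")
```

With these inputs, the best case moves 4 KiB random reads from about 4.1 GB/s to about 7.4 GB/s on the same hardware. Actual gains depend on the drive and workload.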

Deployment: Opt-In for Now

Despite the benefits, Microsoft is proceeding with caution. The feature, delivered in update KB5066835, is not enabled by default. As noted by Neowin, administrators must enable it manually via FeatureManagement overrides in the registry. This opt-in status suggests that Microsoft is prioritizing stability, allowing organizations to validate the new driver (nvmedisk.sys, which takes over from the legacy StorNVMe.sys miniport) in test environments before broad deployment.

Community discussions on Reddit note that although the deployment process is straightforward, requiring only a few PowerShell commands to modify registry keys, it does carry risks. Users on the Guru3D Forums and Tom's Hardware have found that the feature can also be unlocked on consumer editions of Windows 11, though it remains unsupported and potentially unstable there.

Strategic Implications for Enterprise

Datacenter Economics

The reduction in CPU overhead is arguably more critical for businesses than raw speed. In a cloud environment, CPU cycles equal money. If a server uses 45 percent less CPU to handle storage requests, those cycles can be repurposed to run more virtual machines or containers on the same hardware. This increases the density of workloads per server, directly impacting the bottom line for cloud providers and private data centers.
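To put rough numbers on the density argument, the sketch below estimates how many cores a 45 percent cut in per-I/O CPU cost might free on a single host. Every input (core count, the share of CPU spent in the storage stack, vCPUs per VM) is a hypothetical assumption, the model assumes the I/O rate stays constant, and the names `ServerProfile`, `freed_cores` and `extra_vms` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ServerProfile:
    """A hypothetical server; every field is an illustrative assumption."""
    cores: int                # physical cores in the host
    storage_cpu_share: float  # fraction of total CPU spent in the storage stack (0..1)
    vcpus_per_vm: int         # cores each additional VM would need


def freed_cores(server: ServerProfile, cycle_reduction: float = 0.45) -> float:
    """Cores released if per-I/O CPU cost falls by `cycle_reduction`
    while the I/O rate stays the same."""
    return server.cores * server.storage_cpu_share * cycle_reduction


def extra_vms(server: ServerProfile, cycle_reduction: float = 0.45) -> int:
    """Whole additional VMs that fit into the freed cores."""
    return int(freed_cores(server, cycle_reduction) // server.vcpus_per_vm)


if __name__ == "__main__":
    host = ServerProfile(cores=96, storage_cpu_share=0.25, vcpus_per_vm=2)
    print(f"freed cores: {freed_cores(host):.1f}, extra VMs: {extra_vms(host)}")
```

For a 96-core host that spends a quarter of its CPU on storage, this frees about 10.8 cores, room for five more 2-vCPU VMs. The savings scale with the storage share: a host that spends only 5 percent of its CPU on I/O would free about 2 cores, so the benefit is largest on storage-heavy servers.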

Hardware Lifecycle Extension

This software-defined performance boost may also alter hardware refresh cycles. Organizations may find that existing NVMe-equipped servers have untapped headroom that can be accessed simply by updating the OS, potentially delaying the need for expensive hardware upgrades.

Outlook: The Path to Default

Although native NVMe currently requires manual activation in Windows Server 2025, the direction of travel is evident. Microsoft's framing of the feature as a "Storage Revolution" signals that the native NVMe path is intended to become the default. That would complete the decoupling of modern flash storage from the constraints of mechanical-disk protocols.

As validation data accrues from early adopters, Microsoft is expected to bring this architecture officially to client editions of Windows, extending enterprise-grade storage efficiency to workstations and gaming PCs. For now, however, the focus remains on the server room, where efficiency gains are measured in millions of dollars.